Spring jdbc template batch insert

Typical scenario: A Customer contacts you to automate a manual process for them in order to speed up their operations. But in order to do this, the new System you have built needs historical data for say 2 years ago. And we're talking of >500k records (I'm not in favour of a System keeping data it doesn't need. For the purpose of this article, let's say the data is needed to compute some aggregates). Let's even say you got this data and it was Customer Orders. You got your team to pre-process this data into a CSV file. Next would be to ingest it into your System.

Using Spring jdbc template batch insert, we can insert multiple rows into the Database with a single write operation. Let's proceed to create a Spring application.

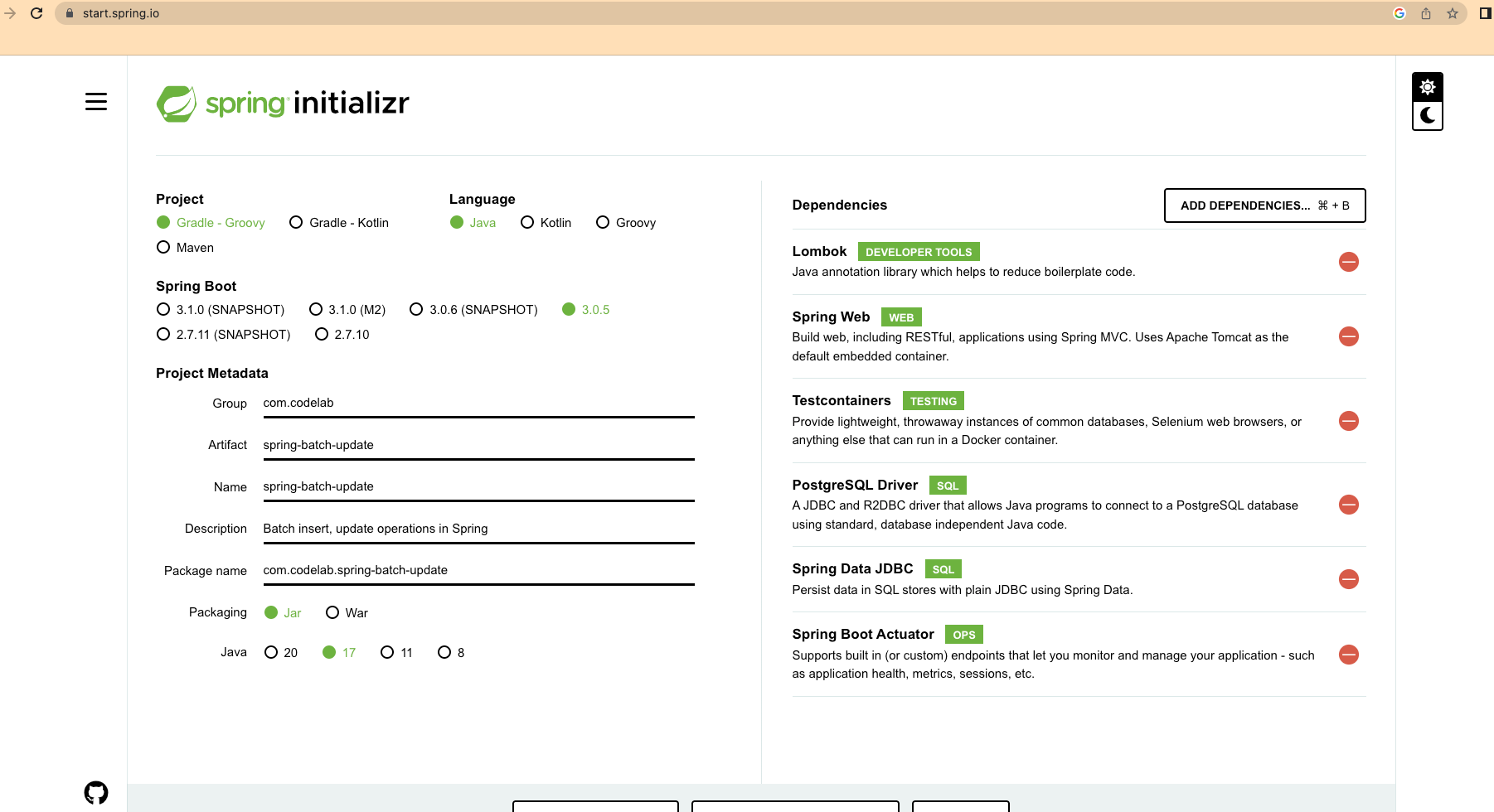

- Head over to start.spring.io to create new project.

2. Create the Table

CREATE SCHEMA IF NOT EXISTS dbuser;

SET search_path TO dbuser; --needed since postgres 15 to not use public schema

CREATE TABLE IF NOT EXISTS customer_order (

id uuid not null,

order_id uuid not null,

customer_id uuid not null,

ordered_at timestamp with time zone not null,

order_status varchar(255),

processed_at timestamp with time zone not null,

product_ids text [] not null,

UNIQUE(order_id, customer_id)

);

3. Write the Service to insert the list of records into the Database using the spring jdbc template.

@RequiredArgsConstructor

@Service

public class CustomerOrderService {

private final JdbcTemplate jdbcTemplate;

private static String QUERY = """

INSERT INTO customer_order (id, order_id, customer_id, ordered_at, order_status, processed_at, product_ids)

VALUES (?, ?, ?, ?, ?, ?, string_to_array(?,','))

ON CONFLICT (order_id, customer_id) DO UPDATE SET product_ids = EXCLUDED.product_ids, order_status = EXCLUDED.order_status

""";

public int[][] handleBatch(List records) {

return jdbcTemplate.batchUpdate(QUERY, records, 128, (ps, item) -> {

ps.setObject(1, UUID.randomUUID());

ps.setObject(2, UUID.fromString(item.getOrderId()));

ps.setObject(3, UUID.fromString(item.getCustomerId()));

ps.setObject(4, Timestamp.valueOf(item.getOrderedAt()).toLocalDateTime()); //at UTC

ps.setObject(5, item.getOrderStatus());

ps.setObject(6, Timestamp.valueOf(item.getProcessedAt()).toLocalDateTime()); //at UTC

ps.setObject(7, item.getProductIds());

});

}

}

In the CustomerOrderService, we set the batch size to 128, so that each row read from the record list will be batched until the batch size before being written to the database. Notice that we added a Unique constraint on the database table for the fields order_id, customer_id. On the second attempt to insert a record with the same order_id, customer_id combination, we get a duplicate key violates constraint error.

When this happens, for us, it means that the record already exists in the database, we want to override certain columns of the matching record with what was read from the CSV we imported. We do this with the UPDATE SET operation, which will hold the row read from the CSV in the EXCLUDED variable, allowing us to access the properties of the EXCLUDED.

As we're using JDBC API, which up till now doesn't allow us to easily pass a Java Array directly to an SQL array column, we do a small trick using the string_to_array postgres function.

The code is available here.

Happy Coding !!!

Member discussion